Sunday, December 8. 2013

OA's Real Battle-Ground in 2014: The One-Year Embargo

The prediction that "It is almost certain that within the next few years most journals will become [Delayed] Gold (with an embargo of 12 months)" is an extrapolation and inference from the manifest pattern across the last half-decade:

1. Journal publishers know (better than anyone) that OA is inevitable and unstoppable, only delayable (via embargoes).

2. Journal publishers also know that it is the first year of sales that sustains their subscriptions. (The talk about later sales is just hyperbole.)

3. Publishers have accordingly been fighting tooth and nail against Green OA mandates, by lobbying against Green OA Mandates, by embargoing Green OA, and by offering and promoting hybrid Gold OA.

4. Although the majority of publishers (60%, including Elsevier and Springer) do not embargo Green OA, of the 40% that do embargo Green OA, most have a 1-year embargo.

5. This 1-year embargo on Green is accordingly publishers' reluctant but realistic compromise: It is an attempt to ward off immediate Green OA with minimal risk by trying to make institutions' and funders' Green mandates Delayed Green Mandates instead of Green OA Mandates.

6. Then, as an added insurance against losing control of their content, more and more publishers are releasing online access themselves, on their own proprietary websites, a year after publication: Delayed Gold.

The publishers' calculation is that since free access after a year is a foregone conclusion, because of Green mandates, it's better (for publishers) if that free access is provided by publishers themselves, as Delayed Gold, so it all remains in their hands (archiving, access-provision, navigation, search, reference linking, re-use, re-publication, etc.).

One-year delayed Gold is also being offered by publishers as insurance against the Green author's version taking over the function of the publisher's version of record.

(Publishers even have a faint hope that 1-year Gold might take the wind out of the sails of Green mandates and the clamor for OA altogether: "Maybe if everyone gets Gold access after a year, that will be the end of it! Back to subscription business as before -- unless the market prefers instead to keep paying the same price that it now pays for subscriptions, but in exchange for immediate, un-embargoed Gold OA, as in SCOAP3 or hybrid Gold…")

But I think most publishers also know that sustaining their current subscription revenue levels is a pipe-dream, and that all their tactics are really doing, as long as they succeed, is holding back the optimal and inevitable outcome for refereed research in the OA era for as long as they possibly can:

And the inevitable outcome is immediate Green OA, with authors posting their refereed, accepted final drafts free for all online immediately upon acceptance for publication. That draft itself will in turn become the version of record, because subscriptions to the publisher's print and online version will become unsustainable once the Green OA version is free for all.

Under mounting cancellation pressure induced by immediate Green OA, publishers will have to cut inessential costs by phasing out the print and online version of record, offloading all access-provision and archiving onto the global network of Green OA institutional repositories, and downsizing to just the provision of the peer review service alone, paid for -- per paper, per round of peer review, as Fair Gold (instead of today's over-priced, double-paid and double-dipped Fool's Gold) -- out of a fraction of each institution's annual windfall savings from their cancelled annual subscriptions.

So both the 1-year embargo on Green and the 1-year release of Gold are attempts to fend off the above transition: OA has become a fight for that first year of access: researchers need and want it immediately; publishers want to hold onto it until and unless they continue to be paid as much as they are being paid now. The purpose of embargoes is to hold OA hostage to publishers' current revenue levels, locking in content until they pay the right price.

But there is an antidote for publisher embargoes on immediate Green, and that is the immediate-institutional-deposit mandate plus the "Almost-OA" Request-a-Copy Button (the HEFCE/Liège model mandate), designating the deposit of the final refereed draft in the author's institutional repository immediately upon acceptance for publication as the sole mechanism for submitting publications for institutional performance review and for compliance with funding conditions.

Once those immediate-deposit mandates are universally adopted, universal OA will only be one keystroke away: The keystroke that sets access to an embargoed deposit as Open Access instead of Closed Access. With immediate-deposit ubiquitous, embargoes will very quickly die their inevitable and well-deserved deaths under the mounting global pressure for immediate OA (for which impatience will be all the more intensified by Button-based Almost-OA).

The scenario is speculative, to be sure, but grounded in the pragmatics, logic and evidence of what is actually going on today.

(Prepare for a vehement round of pseudo-legal publisher FUD about the copy-request Button as its adoption grows -- all groundless and ineffectual, but yet another attempt to delay the inevitable for as long as possible, by hook or by crook…)

Stevan Harnad

Harnad, S. (2007) The Green Road to Open Access: A Leveraged Transition. In: Anna Gacs. The Culture of Periodicals from the Perspective of the Electronic Age. L'Harmattan. 99-106.

______ (2010) No-Fault Peer Review Charges: The Price of Selectivity Need Not Be Access Denied or Delayed. D-Lib Magazine 16 (7/8).

Hitchcock, S. (2013) The effect of open access and downloads ('hits') on citation impact: a bibliography of studies

Houghton, J. & Swan, A. (2013) Planting the Green Seeds for a Golden Harvest: Comments and Clarifications on "Going for Gold". D-Lib Magazine 19 (1/2).

Laakso, M & Björk, B-Ch (2013) Delayed open access. Journal of the American Society for Information Science and Technology 64(7): 1323–29

Rentier, B., & Thirion, P. (2011). The Liège ORBi model: Mandatory policy without rights retention but linked to assessment processes.

Sale, A., Couture, M., Rodrigues, E., Carr, L. and Harnad, S. (2012) Open Access Mandates and the "Fair Dealing" Button. In: Dynamic Fair Dealing: Creating Canadian Culture Online (Rosemary J. Coombe & Darren Wershler, Eds.)

Suber, P. (2012) Open Access. MIT Press.

References added in 2014:

Davis, P. (2013) Journal Usage Half-Life. Association of American Publishers, November 2013.

Suber, P. (2014) What doesn't justify longer embargoes on publicly-funded research. January 2014.

Friday, August 19. 2011

Getting Excited About Getting Cited: No Need To Pay For OA

What the Gaulé & Maystre (G&M) (2011) article shows -- convincingly, in my opinion -- is that in the case of paid hybrid gold OA, most of the observed citation increase is better explained by the fact that the authors of articles that are more likely to be cited are also more likely to pay for hybrid gold OA. (The effect is even stronger when one takes into account the phase in the annual funding cycle when there is more money available to spend.)

Gaulé, Patrick & Maystre, Nicolas (2011) Getting cited: Does open access help? Research Policy (in press)

G & M: "Cross-sectional studies typically find positive correlations between free availability of scientific articles (‘open access’) and citations… Using instrumental variables, we find no evidence for a causal effect of open access on citations. We provide theory and evidence suggesting that authors of higher quality papers are more likely to choose open access in hybrid journals which offer an open access option. Self-selection mechanisms may thus explain the discrepancy between the positive correlation found in Eysenbach (2006) and other cross-sectional studies and the absence of such correlation in the field experiment of Davis et al. (2008)… Our results may not apply to other forms of open access beyond journals that offer an open access option. Authors increasingly self-archive either on their website or through institutional repositories. Studying the effect of that type of open access is a potentially important topic for future research..."

But whether or not to pay money for the OA is definitely not a consideration in the case of Green OA (self-archiving), which costs the author nothing. (The exceedingly low infrastructure costs of hosting Green OA repositories per article are borne by the institution, not the author: like the incomparably higher journal subscription costs, likewise borne by the institution, they are invisible to the author.)

I rather doubt that G & M's economic model translates into the economics of doing a few extra author keystrokes -- on top of the vast number of keystrokes already invested in keying in the article itself and in submitting and revising it for publication.

It is likely, however -- and we have been noting this from the very outset -- that one of the multiple factors contributing to the OA citation advantage (alongside the article quality factor, the article accessibility factor, the early accessibility factor, the competitive [OA vs non-OA] factor and the download factor) is indeed an author self-selection factor.

What G & M have shown, convincingly, is that in the special case of having to pay for OA in a hybrid Gold Journal (PNAS: a high-quality journal that makes all articles OA on its website 6 months after publication), the article quality and author self-selection factors alone (plus the availability of funds in the annual funding cycle) account for virtually all the significant variance in the OA citation advantage: Paying extra to provide hybrid Gold OA during those first 6 months does not buy authors significantly more citations.

G & M correctly acknowledge, however, that neither their data nor their economic model apply to Green OA self-archiving, which costs the author nothing and can be provided for any article, in any journal (most of which are not made OA on the publisher's website 6 months after publication, as in the case of PNAS). Yet it is on Green OA self-archiving that most of the studies of the OA citation advantage (and the ones with the largest and most cross-disciplinary samples) are based.

I also think that because both citation counts and the OA citation advantage are correlated with article quality there is a potential artifact in using estimates of article or author quality as indicators of author self-selection effects: Higher quality articles are cited more, and the size of their OA advantage is also greater. Hence what would need to be done in a test of the self-selection advantage for Green OA would be to estimate article/author quality [but not from their citation counts, of course!] for a large sample and then -- comparing like with like -- to show that among articles/authors estimated to be at the same quality level, there is no significant difference in citation counts between individual articles (published in the same journal and year) that are and are not self-archived by their authors.

No one has done such a study yet -- though we have weakly approximated it (Gargouri et al 2010) using journal impact-factor quartiles. In our approximation, there remains a significant OA advantage even when comparing OA (self-archived) and non-OA articles (same journal/year) within the same quality-quartile. There is still room for a self-selection effect between and within journals within a quartile, however (a journal's impact factor is an average across its individual articles; PNAS, for example, is in the top quartile, but its individual articles still vary in their citation counts). So a more rigorous study would have to tighten up the quality equation much more closely. But my bet is that a significant OA advantage will be observed even when comparing like with like.

Stevan Harnad

EnablingOpenScholarship

Saturday, April 2. 2011

"The Sole Methodologically Sound Study of the Open Access Citation Advantage(!)"

It is true that downloads of research findings are important. They are being measured, and the evidence of the open-access download advantage is growing. See:

S. Hitchcock (2011) "The effect of open access and downloads ('hits') on citation impact: a bibliography of studies"

A. Swan (2010) "The Open Access citation advantage: Studies and results to date"

B. Wagner (2010) "Open Access Citation Advantage: An Annotated Bibliography"

But the reason it is the open-access citation advantage that is especially important is that refereed research is conducted and published so it can be accessed, used, applied and built upon in further research: Research is done by researchers, for uptake by researchers, for the benefit of the public that funds the research. Both research progress and researchers' careers and funding depend on research uptake and impact.

The greatest growth potential for open access today is through open access self-archiving mandates adopted by the universal providers of research: the researchers' universities, institutions and funders (e.g., Harvard and MIT). See the ROARMAP registry of open-access mandates.

Universities adopt open access mandates in order to maximize their research impact. The large body of evidence, in field after field, that open access increases citation impact, helps motivate universities to mandate open access self-archiving of their research output, to make it accessible to all its potential users -- rather than just those whose universities can afford subscription access -- so that all can apply, build upon and cite it. (Universities can only afford subscription access to a fraction of research journals.)

The Davis study lacks the statistical power to show what it purports to show, which is that the open access citation advantage is not causal, but merely an artifact of authors self-selectively self-archiving their better (hence more citable) papers. Davis's sample size was smaller than many of the studies reporting the open access citation advantage. Davis found no citation advantage for randomized open access. But that does not demonstrate that open access is a self-selection artifact -- in that study or any other study -- because Davis did not replicate the widely reported self-archiving advantage either, and that advantage is often based on far larger samples. So the Davis study is merely a small non-replication of a widely reported outcome. (There are a few other non-replications; but most of the studies to date replicate the citation advantage, especially those based on bigger samples.)

Davis says he does not see why the inferences he attempts to make from his results -- that the reported open access citation advantage is an artifact, eliminated by randomization, that there is hence no citation advantage, which implies that there is no research access problem for researchers, and that researchers should just content themselves with the open access download advantage among lay users and forget about any citation advantage -- are not welcomed by researchers.

These inferences are not welcomed because they are based on flawed methodology and insufficient statistical power and yet they are being widely touted -- particularly by the publishing industry lobby (see the spin FASEB is already trying to put on the Davis study: "Paid access to journal articles not a significant barrier for scientists"!) -- as being the sole methodologically sound test of the open access citation advantage! Ignore the many positive studies. They are all methodologically flawed. The definitive finding, from the sole methodologically sound study, is null. So there's no access problem, researchers have all the access they need -- and hence there's no need to mandate open access self-archiving.

No, this string of inferences is not a "blow to open access" -- but it would be if it were taken seriously.

What would be useful and opportune at this point would be meta-analysis.

Stevan Harnad

American Scientist Open Access Forum

EnablingOpenScholarship

The Sound of One Hand Clapping

Suppose many studies report that cancer incidence is correlated with smoking and you want to demonstrate in a methodologically sounder way that this correlation is not caused by smoking itself, but just an artifact of the fact that the same people who self-select to smoke are also the ones who are more prone to cancer. So you test a small sample of people randomly assigned to smoke or not, and you find no difference in their cancer rates. How can you know that your sample was big enough to detect the repeatedly reported correlation at all unless you test whether it's big enough to show that cancer incidence is significantly higher for self-selected smoking than for randomized smoking?

Many studies have reported a statistically significant increase in citations for articles whose authors make them OA by self-archiving them. To show that this citation advantage is not caused by OA but just a self-selection artifact (because authors selectively self-archive their better, more citeable papers), you first have to replicate the advantage itself, for the self-archived OA articles in your sample, and then show that that advantage is absent for the articles made OA at random. But Davis showed only that the citation advantage was absent altogether in his sample. The most likely reason for that is that the sample was much too small (36 journals, 712 articles randomly OA, 65 self-archived OA, 2533 non-OA).

In a recent study (Gargouri et al 2010) we controlled for self-selection using mandated (obligatory) OA rather than random OA. The far larger sample (1984 journals, 3055 articles mandatorily OA, 3664 self-archived OA, 20,982 non-OA) revealed a statistically significant citation advantage of about the same size for both self-selected and mandated OA.
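To make the sample-size point concrete, here is a minimal sketch of a power calculation for the self-archived vs. non-OA comparison in each study. It is an illustration only, not an analysis either study performed. The group sizes are the ones quoted above; the standardized effect size `ASSUMED_EFFECT_SIZE` (0.2, conventionally a "small" effect), the 5% significance level, and the normal approximation are all assumptions introduced here. Real citation counts are heavily skewed, so the numbers should be read as indicative of the direction and rough size of the difference between the two samples, no more.

```python
from statistics import NormalDist

# ASSUMPTION (not from either study): a "small" standardized difference in
# (e.g., log-transformed) citation counts between self-archived and non-OA articles.
ASSUMED_EFFECT_SIZE = 0.2


def power_two_sample(n1: int, n2: int, d: float, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided, two-sample z-test.

    n1, n2: group sizes; d: standardized mean difference; alpha: significance level.
    Uses the normal approximation with unit variance in both groups.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    standard_error = (1 / n1 + 1 / n2) ** 0.5
    shift = d / standard_error
    # Probability that the test statistic lands in either rejection region.
    return (1 - std_normal.cdf(z_crit - shift)) + std_normal.cdf(-z_crit - shift)


# Group sizes as quoted in the text: self-archived OA vs. non-OA articles.
davis_power = power_two_sample(65, 2533, ASSUMED_EFFECT_SIZE)
gargouri_power = power_two_sample(3664, 20982, ASSUMED_EFFECT_SIZE)

print(f"Davis sample (65 vs 2533):         power ~ {davis_power:.2f}")
print(f"Gargouri sample (3664 vs 20,982):  power ~ {gargouri_power:.2f}")
```

Under the assumed effect size, the 65-article self-archived group gives a power well below the conventional 0.8 threshold, whereas the larger sample gives a power close to 1. A different assumed effect size would change the figures, but not the ordering of the two samples.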

If and when Davis's requisite self-selected self-archiving control is ever tested, the outcome will either be (1) the usual significant OA citation advantage in the self-archiving control condition that most other published studies have reported -- in which case the absence of the citation advantage in Davis's randomized condition would indeed be evidence that the citation advantage had been a self-selection artifact that was then successfully eliminated by the randomization -- or (more likely, I should think) (2) no significant citation advantage will be found in the self-archiving control condition either, in which case the Davis study will prove to have been just one non-replication of the usual significant OA citation advantage (perhaps because of Davis's small sample size, the fields, or the fact that most of the non-OA articles become OA on the journal's website after a year). (There have been a few other non-replications; but most studies replicate the OA citation advantage, especially the ones based on larger samples.)

Until that requisite self-selected self-archiving control is done, this is just the sound of one hand clapping.

Readers can be trusted to draw their own conclusions as to whether Davis's study, tirelessly touted as the only methodologically sound one to date, is that -- or an exercise in advocacy.

Self-Selected or Mandated, Open Access Increases Citation Impact for Higher Quality Research (2010) PLOS ONE 5 (10) (authors: Gargouri, Y., Hajjem, C., Lariviere, V., Gingras, Y., Brody, T., Carr, L. and Harnad, S.)

Thursday, March 31. 2011

On Methodology and Advocacy: Davis's Randomization Study of the OA Advantage

Open access, readership, citations: a randomized controlled trial of scientific journal publishing doi:10.1096/fj.11-183988

Philip M. Davis: "Published today in The FASEB Journal we report the findings of our randomized controlled trial of open access publishing on article downloads and citations. This study extends a prior study of 11 journals in physiology (Davis et al, BMJ, 2008) reported at 12 months to 36 journals covering the sciences, social sciences and humanities at 3yrs. Our initial results are generalizable across all subject disciplines: open access increases article downloads but has no effect on article citations... You may expect a routine cut-and-paste reply by S.H. shortly... I see the world as a more complicated and nuanced place than through the lens of advocacy."

Sorry to disappoint! Nothing new to cut-and-paste or reply to:

Still no self-selected self-archiving control, hence no basis for the conclusions drawn (to the effect that the widely reported OA citation advantage is merely an artifact of a self-selection bias toward self-archiving the better, hence more citeable articles -- a bias that the randomization eliminates). The methodological flaw, still uncorrected, has been pointed out before.

If and when the requisite self-selected self-archiving control is ever tested, the outcome will either be (1) the usual significant OA citation advantage in the self-archiving control condition that most other published studies have reported -- in which case the absence of the citation advantage in Davis's randomized condition would indeed be evidence that the citation advantage had been a self-selection artifact that was then successfully eliminated by the randomization -- or (more likely, I should think) (2) there will be no significant citation advantage in the self-archiving control condition either, in which case the Davis study will prove to have been just a non-replication of the usual significant OA citation advantage (perhaps because of Davis's small sample size, the fields, or the fact that most of the non-OA articles become OA on the journal's website after a year).

Until the requisite self-selected self-archiving control is done, this is just the sound of one hand clapping.

Readers can be trusted to draw their own conclusions as to whether this study, tirelessly touted as the only methodologically sound one to date, is that -- or an exercise in advocacy.

Stevan Harnad

American Scientist Open Access Forum

EnablingOpenScholarship

Wednesday, February 23. 2011

Critique of McCabe & Snyder: Online ≠ OA, and OA ≠ OA journal

Comments on:

McCabe, MJ & Snyder, CM (2011) Did Online Access to Journals Change the Economics Literature?

Abstract: Does online access boost citations? The answer has implications for issues ranging from the value of a citation to the sustainability of open-access journals. Using panel data on citations to economics and business journals, we show that the enormous effects found in previous studies were an artifact of their failure to control for article quality, disappearing once we add fixed effects as controls. The absence of an aggregate effect masks heterogeneity across platforms: JSTOR boosts citations around 10%; ScienceDirect has no effect. We examine other sources of heterogeneity including whether JSTOR benefits "long-tail" or "superstar" articles more.

The following quotes are from McCabe, MJ (2011) Online access versus open access. Inside Higher Ed. February 10, 2011.

MCCABE: …I thought it would be appropriate to address the issue that is generating some heat here, namely whether our results can be extrapolated to the OA environment…. 1. Selection bias and other empirical modeling errors are likely to have generated overinflated estimates of the benefits of online access (whether free or paid) on journal article citations in most if not all of the recent literature.

If "selection bias" refers to authors' bias toward selectively making their better (hence more citeable) articles OA, then this was controlled for in the comparison of self-selected vs. mandated OA, by Gargouri et al (2010) (uncited in the McCabe & Snyder (2011) [M & S] preprint, but known to the authors -- indeed the first author requested, and received, the entire dataset for further analysis: we are all eager to hear the results).

If "selection bias" refers to the selection of the journals for analysis, I cannot speak for studies that compare OA journals with non-OA journals, since we only compare OA articles with non-OA articles within the same journal. And it is only a few studies, like Evans and Reimer's, that compare citation rates for journals before and after they are made accessible online (or, in some cases, freely accessible online). Our principal interest is in the effects of immediate OA rather than delayed or embargoed OA (although the latter may be of interest to the publishing community).

MCCABE: 2. There are at least 2 “flavors” found in this literature: 1. papers that use cross-section type data or a single observation for each article (see for example, Lawrence (2001), Harnad and Brody (2004), Gargouri, et. al. (2010)) and 2. papers that use panel data or multiple observations over time for each article (e.g. Evans (2008), Evans and Reimer (2009)).

We cannot detect any mention or analysis of the Gargouri et al. paper in the M & S paper…

MCCABE: 3. In our paper we reproduce the results for both of these approaches and then, using panel data and a robust econometric specification (that accounts for selection bias, important secular trends in the data, etc.), we show that these results vanish.

We do not see our results cited or reproduced. Does "reproduced" mean "simulated according to an econometric model"? If so, that is regrettably too far from actual empirical findings to be anything but speculations about what would be found if one were actually to do the empirical studies.

MCCABE: 4. Yes, we “only” test online versus print, and not OA versus online for example, but the empirical flaws in the online versus print and the OA versus online literatures are fundamentally the same: the failure to properly account for selection bias. So, using the same technique in both cases should produce similar results.

Unfortunately this is not very convincing. Flaws there may well be in the methodology of studies comparing citation counts before and after the year in which a journal goes online. But these are not the flaws of studies comparing citation counts of articles that are and are not made OA within the same journal and year.

Nor is the vague attribution of "failure to properly account for selection bias" very convincing, particularly when the most recent study controlling for selection bias (by comparing self-selected OA with mandated OA) has not even been taken into consideration.

Conceptually, the reason the question of whether online access increases citations over offline access is entirely different from the question of whether OA increases citations over non-OA is that (as the authors note), the online/offline effect concerns ease of access: Institutional users have either offline access or online access, and, according to M & S's results, in economics, the increased ease of accessing articles online does not increase citations.

This could be true (although the growth across those same years of the tendency in economics to make prepublication preprints OA [harvested by RePEc] through author self-archiving, much as the physicists had started doing a decade earlier in arXiv, and computer scientists started doing even earlier [later harvested by CiteSeerX], could be producing a huge background effect not taken into account at all in M & S's painstaking temporal analysis, which itself appears as an OA preprint in SSRN!).

But any way one looks at it, there is an enormous difference between comparing easy vs. hard access (online vs. offline) and comparing access with no access. For when we compare OA vs non-OA, we are taking into account all those potential users that are at institutions that cannot afford subscriptions (whether offline or online) to the journal in which an article appears. The barrier, in other words (though one should hardly have to point this out to economists), is not an ease barrier but a price barrier: For users at nonsubscribing institutions, non-OA articles are not just harder to access: They are impossible to access -- unless a price is paid.

(I certainly hope that M & S will not reply with "let them use interlibrary loan (ILL)"! A study analogous to M & S's online/offline study comparing citations for offline vs. online vs. ILL access in the click-through age would not only strain belief if it too found no difference, but it too would fail to address OA, since OA is about access when one has reached the limits of one's institution's subscription/license/pay-per-view budget. Hence it would again miss all the usage and citations that an article would have gained if it had been accessible to all its potential users and not just to those whose institutions could afford access, by whatever means.)

It is ironic that M & S draw their conclusions about OA in economic terms (predictably, as their interest is in modelling publication economics): in terms of the costs and benefits, for an author, of paying to publish in an OA journal, concluding that since they have shown it will not generate more citations, it is not worth the money.

But the most compelling findings on the OA citation advantage come from OA author self-archiving (of articles published in non-OA journals), not from OA journal publishing. Those are the studies that show the OA citation advantage, and the advantage does not cost the author a penny! (The benefits, moreover, accrue not only to authors and users, but to their institutions too, as the economic analysis of Houghton et al shows.)

And the extra citations resulting from OA are almost certainly coming from users for whom access to the article would otherwise have been financially prohibitive. (Perhaps it's time for more econometric modeling from the user's point of view too…)

I recommend that M & S look at the studies of Michael Kurtz in astrophysics. Those, too, included sophisticated long-term studies of the effect of the wholesale switch from offline to online, and Kurtz found that total citations were in fact slightly reduced, overall, when journals became accessible online! But astrophysics, too, is a field in which OA self-archiving is widespread. Hence whether and when journals go online is moot, insofar as citations are concerned.

(The likely hypothesis for the reduced citations -- compatible also with our own findings in Gargouri et al -- is that OA levels the playing field for users: OA articles are accessible to all their potential users, not just to those whose institutions can afford toll access. As a result, users can self-selectively decide to cite only the best and most relevant articles of all, rather than having to make do with a selection among only the articles to which their institutions can afford toll access. One corollary of this [though probably also a spinoff of the Seglen/Pareto effect] is that the biggest beneficiaries of the OA citation advantage will be the best articles. This is a user-end -- rather than an author-end -- selection effect...)

MCCABE: 5. At least in the case of economics and business titles, it is not even possible to properly test for an independent OA effect by specifically looking at OA journals in these fields since there are almost no titles that switched from print/online to OA (I can think of only one such title in our sample that actually permitted backfiles to be placed in an OA repository). Indeed, almost all of the OA titles in econ/business have always been OA and so no statistically meaningful before and after comparisons can be performed.

The multiple conflation here is so flagrant that it is almost laughable. Online ≠ OA and OA ≠ OA journal.

First, the method of comparing the effect on citations before vs. after the offline/online switch will have to make do with its limitations. (We don't think it's of much use for studying OA effects at all.) The method of comparing the effect on citations of OA vs. non-OA within the same (economics/business, toll-access) journals can certainly proceed apace in those disciplines: the studies have been done, and the results are much the same as in other disciplines.

M & S have our latest dataset: Perhaps they would care to test whether the economics/business subset of it is an exception to our finding that (a) there is a significant OA advantage in all disciplines, and (b) it's just as big when the OA is mandated as when it is self-selected.

MCCABE: 6. One alternative, in the case of cross-section type data, is to construct field experiments in which articles are randomly assigned OA status (e.g. Davis (2008) employs this approach and reports no OA benefit).

And another one -- based on an incomparably larger N, across far more fields -- is the Gargouri et al study that M & S fail to mention in their article, in which articles are mandatorily assigned OA status, and for which they have the full dataset in hand, as requested.

MCCABE: 7. Another option is to examine articles before and after they were placed in OA repositories, so that the likely selection bias effects, important secular trends, etc. can be accounted for (or in economics jargon, “differenced out”). Evans and Reimer’s attempt to do this in their 2009 paper but only meet part of the econometric challenge.

M & S are rather too wedded to their before/after method and thinking! The sensible time for authors to self-archive their papers is immediately upon acceptance for publication. That's before the published version has even appeared. Otherwise one is not studying OA but OA embargo effects. (But let me agree on one point: Unlike journal publication dates, OA self-archiving dates are not always known or taken into account; so there may be some drift there, depending on when the author self-archives. The solution is not to study the before/after watershed, but to focus on the articles that are self-archived immediately rather than later.)

Stevan Harnad

Gargouri, Y., Hajjem, C., Lariviere, V., Gingras, Y., Brody, T., Carr, L. and Harnad, S. (2010) Self-Selected or Mandated, Open Access Increases Citation Impact for Higher Quality Research. PLOS ONE 5 (10). e13636

Harnad, S. (2010) The Immediate Practical Implication of the Houghton Report: Provide Green Open Access Now. Prometheus 28 (1): 55-59.

Wednesday, October 20. 2010

Correlation, Causation, and the Weight of Evidence

SUMMARY: One can only speculate on the reasons why some might still wish to cling to the self-selection bias hypothesis in the face of all the evidence to date. It seems almost a matter of common sense that making articles more accessible to users also makes them more usable and citable -- especially in a world where most researchers are familiar with the frustration of arriving at a link to an article that they would like to read (but their institution does not subscribe), so they are asked to drop it into the shopping cart and pay $30 at the check-out counter. The straightforward causal relationship is the default hypothesis, based on both plausibility and the cumulative weight of the evidence. Hence the burden of providing counter-evidence to refute it is now on the advocates of the alternative.

Jennifer Howard ("Is there an Open-Access Advantage?," Chronicle of Higher Education, October 19 2010) seems to have missed the point of our article. It is undisputed that study after study has found that Open Access (OA) is correlated with higher probability of citation. The question our study addressed was whether making an article OA causes the higher probability of citation, or the higher probability causes the article to be made OA.

The latter is the "author self-selection bias" hypothesis, according to which the only reason OA articles are cited more is that authors do not make all articles OA: only the better ones, the ones that are also more likely to be cited.

But almost no one finds that OA articles are cited more a year after publication. The OA citation advantage only becomes statistically detectable after citations have accumulated for 2-3 years.

Even more important, Davis et al. did not test the obvious and essential control condition in their randomized OA experiment: They did not test whether there was a statistically detectable OA advantage for self-selected OA in the same journals and time-window. You cannot show that an effect is an artifact of self-selection unless you show that with self-selection the effect is there, whereas with randomization it is not. All Davis et al showed was that there is no detectable OA advantage at all in their one-year sample (247 articles from 11 Biology journals); randomness and self-selection have nothing to do with it.

Davis et al released their results prematurely. We are waiting*,** to hear what Davis finds after 2-3 years, when he completes his doctoral dissertation. But if all he reports is that he has found no OA advantage at all in that sample of 11 biology journals, and that interval, rather than an OA advantage for the self-selected subset and no OA advantage for the randomized subset, then again, all we will have is a failure to replicate the positive effect that has now been reported by many other investigators, in field after field, often with far larger samples than Davis et al's.

Meanwhile, our study was similar to Davis et al's, except that it had a much bigger sample, across many fields, and a much larger time window -- and, most important, we did have a self-selective matched-control subset, which did show the usual OA advantage. Instead of comparing self-selective OA with randomized OA, however, we compared it with mandated OA -- which amounts to much the same thing, because the point of the self-selection hypothesis is that the author picks and chooses what to make OA, whereas if the OA is mandatory (required), the author is not picking and choosing, just as the author is not picking and choosing when the OA is imposed randomly.

Davis's results are welcome and interesting, and include some good theoretical insights, but insofar as the OA Citation Advantage is concerned, the empirical findings turn out to be just a failure to replicate the OA Citation Advantage in that particular sample and time-span -- exactly as predicted above. The original 2008 sample of 247 OA and 1372 non-OA articles in 11 journals one year after publication has now been extended to 712 OA and 2533 non-OA articles in 36 journals two years after publication. The result is a significant download advantage for OA articles but no significant citation advantage.

*Note added October 31, 2010: Davis's dissertation turns out to have been posted on the same day as the present posting (October 20; thanks to Les Carr for drawing this to my attention on October 24!).

**Note added November 24, 2010: Phil Davis's results -- a replication of the OA download advantage and a non-replication of the OA citation advantage -- have since been published as: Davis, P. (2010) Does Open Access Lead to Increased Readership and Citations? A Randomized Controlled Trial of Articles Published in APS Journals. The Physiologist 53(6) December 2010.

The only way to describe this outcome is as a non-replication of the OA Citation Advantage on this particular sample; it is most definitely not a demonstration that the OA Advantage is an artifact of self-selection, since there is no control group demonstrating the presence of the citation advantage with self-selected OA and the absence of the citation advantage with randomized OA across the same sample and time-span: There is simply the failure to detect any citation advantage at all.
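The inferential logic here can be spelled out as a small decision table. The sketch below is only an illustration of the argument in this posting: the function name and the wording of the four outcomes are hypothetical labels, not anything taken from Davis's study or from ours.

```python
def interpret(self_selected_advantage: bool, randomized_advantage: bool) -> str:
    """Map the two possible findings onto the conclusion they would license.

    Both arguments are hypothetical booleans: was a citation advantage
    detected for self-selected OA, and for randomly assigned OA, in the
    same journals and the same time-window?
    """
    if self_selected_advantage and not randomized_advantage:
        # The only pattern that supports the self-selection hypothesis.
        return "consistent with a self-selection artifact"
    if self_selected_advantage and randomized_advantage:
        return "consistent with a causal OA advantage"
    if not self_selected_advantage and not randomized_advantage:
        # No effect in either arm: nothing to attribute to self-selection.
        return "non-replication: no advantage detected at all"
    # Assumed label for the remaining, unexpected pattern.
    return "advantage only under randomization: unexpected, needs scrutiny"


if __name__ == "__main__":
    # No advantage detected in either arm of the same sample and time-span.
    print(interpret(self_selected_advantage=False, randomized_advantage=False))
```

Only the first pattern would count as evidence that the advantage is an artifact of self-selection; a sample with no self-selected control arm cannot produce it.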

This failure to replicate is almost certainly due to the small sample size as well as the short time-span. (Davis's a-priori estimates of the sample size required to detect a 20% difference took no account of the fact that citations grow with time; and the a-priori criterion fails even to be met for the self-selected subsample of 65.)
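To see why both sample size and elapsed time matter, here is a rough sketch of how the power to detect a 20% citation difference depends on them. It is not Davis's own power calculation: it uses a normal approximation for a two-sided test on mean citation counts, and the mean citation levels (1 and 5 per article) and the over-dispersion factor of 3 are hypothetical values chosen purely for illustration. Only the article counts (247 OA and 1372 non-OA) come from the study described above.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(n_oa, n_non_oa, mean_citations,
                 advantage=0.20, dispersion=3.0, alpha=0.05):
    """Approximate power of a two-sided z-test on mean citation counts.

    Assumptions (all illustrative): citation counts have variance equal to
    `dispersion` times their mean, and OA articles have a mean that is
    `advantage` (here 20%) higher than non-OA articles.
    """
    mean_oa = mean_citations * (1 + advantage)
    var_oa = dispersion * mean_oa
    var_non_oa = dispersion * mean_citations
    # Standard error of the difference between the two group means.
    std_err = sqrt(var_oa / n_oa + var_non_oa / n_non_oa)
    shift = (mean_oa - mean_citations) / std_err
    normal = NormalDist()
    z_crit = normal.inv_cdf(1 - alpha / 2)
    # Probability that the test statistic lands in either rejection region.
    return (1 - normal.cdf(z_crit - shift)) + normal.cdf(-z_crit - shift)


if __name__ == "__main__":
    # Same sample, few citations per article (early) vs. more (later).
    print(approx_power(247, 1372, mean_citations=1.0))
    print(approx_power(247, 1372, mean_citations=5.0))
```

Under these assumptions the standardized difference grows with the square root of the mean citation count, so the same sample has more power once citations have had time to accumulate.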

"I could not detect the effect in a much smaller and briefer sample than others" is hardly news! Compare the sample size of Davis's negative results with the sample-sizes and time-spans of some of the studies that found positive results:

And our finding is that the mandated OA advantage is just as big as the self-selective OA advantage.

As we discussed in our article, if someone really clings to the self-selection hypothesis, there are some remaining points of uncertainty in our study that self-selectionists can still hope will eventually bear them out: Compliance with the mandates was not 100%, but 60-70%. So the self-selection hypothesis has a chance of being resurrected if one argues that now it is no longer a case of positive selection for the stronger articles, but a refusal to comply with the mandate for the weaker ones. One would have expected, however, that if this were true, the OA advantage would at least be weaker for mandated OA than for unmandated OA, since the percentage of total output that is self-archived under a mandate is almost three times the 5-25% that is self-archived self-selectively. Yet the OA advantage is undiminished with 60-70% mandate compliance in 2002-2006. We have since extended the window by three more years, to 2009; the compliance rate rises by another 10%, but the mandated OA advantage remains undiminished. Self-selectionists don't have to cede till the percentage is 100%, but their hypothesis gets more and more far-fetched...

The other way of saving the self-selection hypothesis despite our findings is to argue that there was a "self-selection" bias in terms of which institutions do and do not mandate OA: Maybe it's the better ones that self-select to do so. There may be a plausible case to be made that one of our four mandated institutions -- CERN -- is an elite institution. (It is also physics-only.) But, as we reported, we re-did our analysis removing CERN, and we got the same outcome. Even if the objection of eliteness is extended to Southampton ECS, removing that second institution did not change the outcome either. We leave it to the reader to decide whether it is plausible to count our remaining two mandating institutions -- University of Minho in Portugal and Queensland University of Technology in Australia -- as elite institutions, compared to other universities. It is a historical fact, however, that these four institutions were the first in the world to elect to mandate OA.

One can only speculate on the reasons why some might still wish to cling to the self-selection bias hypothesis in the face of all the evidence to date. It seems almost a matter of common sense that making articles more accessible to users also makes them more usable and citable -- especially in a world where most researchers are familiar with the frustration of arriving at a link to an article that they would like to read (but their institution does not subscribe), so they are asked to drop it into the shopping cart and pay $30 at the check-out counter. The straightforward causal relationship is the default hypothesis, based on both plausibility and the cumulative weight of the evidence. Hence the burden of providing counter-evidence to refute it is now on the advocates of the alternative.

Davis, PN, Lewenstein, BV, Simon, DH, Booth, JG, & Connolly, MJL (2008) Open access publishing, article downloads, and citations: randomised controlled trial, British Medical Journal 337: a568

Gargouri, Y., Hajjem, C., Lariviere, V., Gingras, Y., Brody, T., Carr, L. and Harnad, S. (2010) Self-Selected or Mandated, Open Access Increases Citation Impact for Higher Quality Research. PLOS ONE 5 (10). e13636

Harnad, S. (2008) Davis et al's 1-year Study of Self-Selection Bias: No Self-Archiving Control, No OA Effect, No Conclusion. Open Access Archivangelism July 31 2008

Tuesday, October 19. 2010

Comparing OA and Non-OA: Some Methodological Supplements

Response to Martin Fenner's comments on Gargouri Y, Hajjem C, Larivière V, Gingras Y, Carr L, Brody T, Harnad S. (2010) Self-Selected or Mandated, Open Access Increases Citation Impact for Higher Quality Research. PLoS ONE 5(10):e13636+. doi:10.1371/journal.pone.0013636.

(1) Yes, we cited the Davis et al study. That study does not show that the OA citation advantage is a result of self-selection bias. It simply shows (as many other studies have noted) that no OA advantage at all (whether randomized or self-selected) is detectable only a year after publication, especially in a small sample. It's since been over two years and we're still waiting to hear whether Davis et al's randomized sample still has no OA advantage while a self-selected control sample from the same journals and year does. That would be the way to show that the OA advantage is a self-selection bias. Otherwise it's just the sound of one hand clapping.

Harnad, S (2008) Davis et al's 1-year Study of Self-Selection Bias: No Self-Archiving Control, No OA Effect, No Conclusion. Open Access Archivangelism. July 31 2008.

(2) No, we did not look only at self-archiving in institutional repositories. Our matched-control sample of self-selected self-archived articles came from institutional repositories, central repositories, and authors' websites. (All of that is "Green OA.") It was only the mandated sample that was exclusively from institutional repositories. (Someone else may wish to replicate our study using funder-mandated self-archiving in central repositories. The results are likely to be much the same, but the design and analysis would be rather more complicated.)

(4) Yes, we systematically excluded articles in Gold OA journals from our sample, not because we do not believe that they generate the OA advantage too, but because it is impossible to do matched-control comparisons between OA and non-OA articles in the same journal issue with Gold OA journals, since all their articles are OA. (It would for much the same reason be difficult to do this comparison in a field where 100% of the articles were OA, even if we were interested in unrefereed preprints; but we were not: we were interested in open access to refereed journal articles.)

(5) As to the 60% mandated self-archiving rates: The institutions we studied had mandated OA in 2003-2004. Our test time-span was 2002-2006. At least two of those institutions (Southampton ECS and CERN) and probably the other two also (Minho and QUT) have deposit rates of close to 100% by now. (We have since extended the analyses to 2009 and found exactly the same result.)

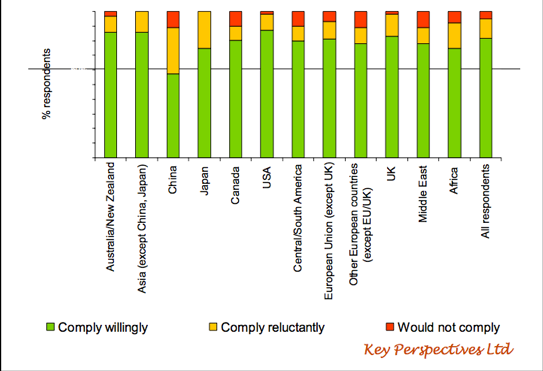

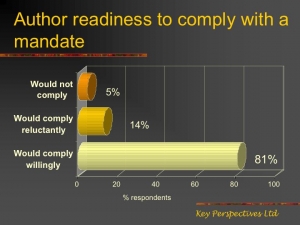

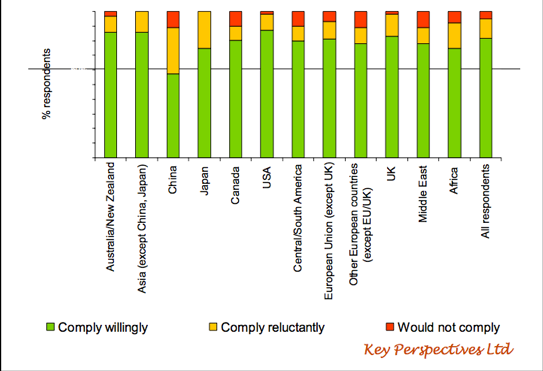

(6) "What is wrong if [OA] rates are 15%"? We leave that to the reader as an exercise. That, after all, is what OA is all about. But surveys have shown -- and outcome studies have confirmed -- that although most researchers do not self-archive spontaneously, 95% report that they would self-archive if their institutions or funders required it, over 80% of them saying they would do it willingly. (Most don't self-archive spontaneously because of worries -- groundless worries -- that it might be illegal or might entail a lot of work.)

(7) Yes, "there are many reasons other than citation rates that make OA worthwhile," but if most researchers will only provide OA if it is mandated, then it is important to demonstrate to researchers why it is worth their while.

(8) If we have given "the impression that mandatory self-archiving of post-prints in institutional repositories is the only reasonable Open Access strategy," then we have succeeded in conveying the implication of our findings.

Swan, A. (2006) The culture of Open Access: researchers’ views and responses, in Jacobs, Neil, Eds. Open Access: Key Strategic, Technical and Economic Aspects. Chandos Publishing (Oxford) Limited.

Tuesday, August 10. 2010

Mismeasure of the Needs of Research, Researchers, Universities and Funders

Phil Davis continues his inexplicable preoccupation with what's best for the publishing industry, at the expense of what is best for research, researchers, their institutions, their funders, and the tax-paying public that funds them -- the ones by and for whom the research is funded.

Phil's latest point is that the low uptake by authors of committed Gold OA funds indicates that authors don't really want OA.

But authors themselves have responded quite clearly, and repeatedly, in Alma Swan's international surveys, that they do need, want, and value OA. Yet they also state that they will only provide OA to their own writings if their institutions and funders require (i.e., mandate) them to provide it.

(There is definitely a paradox here, but it is not resolved by simply assuming that authors don't really want OA! It's rather more complicated than that. Authors would not publish much, either, if their institutions and funders did not require them to "publish or perish." And without that, where would the publishing industry be?)

I have dubbed the condition "Zeno's Paralysis," which is the fact that for a variety of reasons (38 at last count) -- all groundless and easily defeasible but relentlessly recurrent nonetheless, including worries about copyright, worries about getting published, worries about peer review, and even worries about the time and effort it might require to provide OA -- most authors will not provide OA spontaneously. And the cure is not only known, but has already been administered by over 150 institutions and funders: Mandate OA.

And the only form of OA that institutions and funders can mandate is Green OA self-archiving of all peer-reviewed journal articles, immediately upon acceptance for publication.

Apart from that, all they can do is provide some of their scarce funds (largely tied up in paying for subscriptions) to pay for a little Gold OA publishing. The uptake for that is even lower than for unmandated Green OA self-archiving, but that's certainly not evidence against Alma Swan's survey findings about what authors want, and what it will take to get them to provide it (and Arthur Sale's data confirming that authors actually do as they say they will do, if mandated).

Ironically, the book Phil cites -- Stephen Jay Gould's "Mismeasure of Man" -- was also a critique, some of it well-taken, but likewise tainted by an ideological bias. In Gould's case the ideological bias was far, far more noble than Phil's parochial bias in favor of publishers' interests and the status quo: Gould was an idealistic marxist whose bias was against evidence indicating that there are genetic differences between human populations. In the core chapter of that book -- "The Real Error of Cyril Burt" -- Gould tried to show that psychometricians had made a methodological and conceptual error in interpreting the "G" factor -- a strong positive common factor that kept being observed among multiple, diverse psychometric tests across diverse populations -- as if it were an empirical finding, whereas (according to Gould) it was merely an inevitable result of their method of analysis: the "positive-definite manifold" that invariably results from the construction of a lot of positively correlated tests. (What Gould missed was that there was nothing about the analytic method that guaranteed that the joint correlations should all be positive, and certainly not that the resultant G factor should turn out, as it did, to be correlated with genetic differences between populations.)

The consensus in the field of psychometrics and biometrics today is that in this case the real error was Gould's.

Wednesday, March 17. 2010

Update on Meta-Analysis of Studies on Open Access Impact Advantage

In announcing Alma Swan's Review of Studies on the Open Access Impact Advantage, I had suggested that the growing number of studies on the OA Impact Advantage were clearly ripe for a meta-analysis. Here is an update:

David Wilson wrote:

"Interesting discussion. Phil Davis has a limited albeit common view of meta-analysis. Within medicine, meta-analysis is generally applied to a small set of highly homogeneous studies. As such, the focus is on the overall or pooled effect with only a secondary focus on variability in effects. Within the social sciences, there is a strong tradition of meta-analyzing fairly heterogeneous sets of studies. The focus is clearly not on the overall effect, which would be rather meaningless, but rather on the variability in effect and the study characteristics, both methodological and substantive, that explain that variability.

"I don't know enough about this area to ascertain the credibility of [Phil Davis's] criticism of the methodologies of the various studies involved. However, the one study that [Phil Davis] claims is methodologically superior in terms of internal validity (which it might be) is clearly deficient in statistical power. As such, it provides only a weak test. Recall, that a statistically nonsignificant finding is a weak finding -- a failure to reject the null and not acceptance of the null.

"Meta-analysis could be put to good use in this area. It won't resolve the issue of whether the studies that Davis thinks are flawed are in fact flawed. It could explore the consistency in effect across these studies and whether the effect varies by the method used. Both would add to the debate on this issue."

Lipsey, MW & Wilson DB (2001) Practical Meta-Analysis. Sage.

David B. Wilson, Ph.D.

Associate Professor

Chair, Administration of Justice Department

George Mason University

10900 University Boulevard, MS 4F4

Manassas, VA 20110-2203
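For readers unfamiliar with the two quantities Wilson distinguishes, the pooled effect and the variability in effects, here is a minimal sketch of a standard fixed-effect (inverse-variance) pooling together with Cochran's Q and the I² heterogeneity index. The effect sizes and standard errors in the usage example are made-up numbers for illustration only; they are not results from any of the OA studies discussed here, and a real meta-analysis of heterogeneous studies would more likely use a random-effects model with moderator analysis.

```python
from math import sqrt


def pool_fixed_effect(effects, std_errors):
    """Inverse-variance pooling with Cochran's Q and I^2.

    `effects` and `std_errors` are parallel lists, one entry per study.
    Returns (pooled_effect, pooled_std_error, q_statistic, i_squared).
    """
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, effects)) / total_weight
    pooled_se = sqrt(1.0 / total_weight)
    # Cochran's Q: weighted squared deviations of each study from the pool.
    q = sum(w * (e - pooled) ** 2 for w, e in zip(weights, effects))
    df = len(effects) - 1
    # I^2: share of variability beyond what sampling error alone would give.
    i_squared = max(0.0, (q - df) / q) if q > 0 else 0.0
    return pooled, pooled_se, q, i_squared


if __name__ == "__main__":
    # Hypothetical effect sizes from four imaginary studies.
    effects = [0.35, 0.10, 0.42, 0.05]
    std_errors = [0.08, 0.20, 0.10, 0.25]
    print(pool_fixed_effect(effects, std_errors))
```

A small, underpowered study enters with a large standard error and hence a small weight, which is one way of seeing why a single nonsignificant result is only a weak test.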

Added Mar 15 2010

See also (thanks to Peter Suber for spotting this study!):

Wagner, A. Ben (2010) Open Access Citation Advantage: An Annotated Bibliography. Issues in Science and Technology Librarianship. 60. Winter 2010

On Mar 12, 2010 Gene V Glass wrote the following:

"Far more issues about OA and meta analysis have been raised in this thread for me to [be able to] comment on. But having dedicated 35 years of my efforts to meta analysis and 20 to OA, I can’t resist a couple of quick observations.

Holding up one set of methods (be they RCT or whatever) as the gold standard is inconsistent with decades of empirical work in meta analysis that shows that “perfect studies” and “less than perfect studies” seldom show important differences in results. If the question at hand concerns experimental intervention, then random assignment to groups may well be inferior as a matching technique to even an ex post facto matching of groups. Randomization is not the royal road to equivalence of groups; it’s the road to probability statements about differences.

Claims about the superiority of certain methods are empirical claims. They are not a priori dicta about what evidence can and can not be looked at."

Glass, G.V.; McGaw, B.; & Smith, M.L. (1981). Meta-analysis in Social Research. Beverly Hills, CA: SAGE.

Rudner, Lawrence, Gene V Glass, David L. Evartt, and Patrick J. Emery (2000). A user's guide to the meta-analysis of research studies. ERIC Clearinghouse on Assessment and Evaluation, University of Maryland, College Park.

GVG's Publications
